EEG analysis

EEG analysis for assessment of affective state

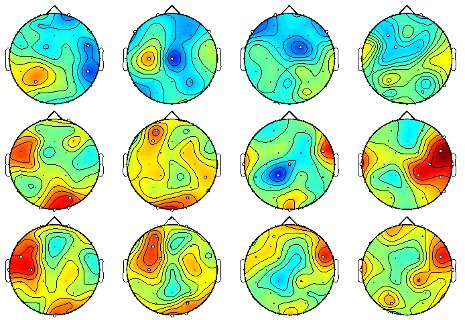

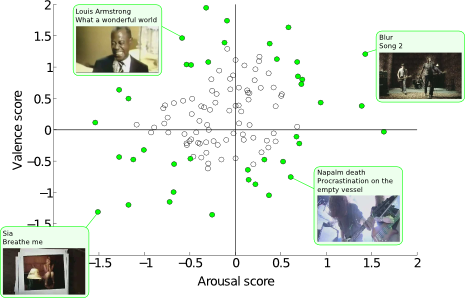

This research concerns the use of EEG signal analysis to assess a user's affective state. I performed three experiments (together with researchers from UniGe, UTwente and EPFL) in which participants watched a set of music videos. After each video, the participant rated it on the well-known valence and arousal scales. I used power spectral density features in combination with a binary SVM classifier to classify the participants' EEG signals into low/high valence and low/high arousal. In the most recent experiment, accuracies of 62.0% and 57.6% were attained for binary valence and arousal classification, respectively. The DEAP dataset collected during this last experiment has been made public and, with 32 participants, is to the best of our knowledge the largest publicly available dataset containing EEG, peripheral signals and face video. Finally, this technique was used as part of a real-time music video recommendation system.
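To make the pipeline described above more concrete, here is a minimal sketch using only NumPy. It is an illustration under stated assumptions, not the code used in the experiments: the band limits, the windowed-periodogram PSD estimate, the rating threshold of 5, the synthetic data, and every function name (`band_power_features`, `train_linear_svm`, `make_synthetic_trials`, `run_demo`) are hypothetical choices. The classifier is a plain linear SVM trained by subgradient descent on the hinge loss, standing in for whatever SVM implementation was actually used.

```python
import numpy as np

# Assumed frequency bands in Hz; the original band definitions are not given here.
BANDS = {"theta": (4, 8), "alpha": (8, 13), "beta": (13, 30), "gamma": (30, 45)}

def band_power_features(trial, fs, bands=BANDS):
    """Log mean PSD per channel and band for one trial of shape (n_channels, n_samples)."""
    trial = trial - trial.mean(axis=1, keepdims=True)       # remove DC offset per channel
    n = trial.shape[1]
    window = np.hanning(n)
    spectrum = np.fft.rfft(trial * window, axis=1)
    psd = np.abs(spectrum) ** 2 / (fs * np.sum(window ** 2))  # windowed periodogram
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    feats = [np.log(psd[:, (freqs >= lo) & (freqs < hi)].mean(axis=1) + 1e-12)
             for lo, hi in bands.values()]
    return np.concatenate(feats)                              # length n_bands * n_channels

def train_linear_svm(X, y, lam=0.01, epochs=200, lr=0.1):
    """Linear SVM: minimise lam/2 * ||w||^2 + mean hinge loss, labels y in {-1, +1}."""
    w, b = np.zeros(X.shape[1]), 0.0
    for _ in range(epochs):
        active = y * (X @ w + b) < 1                          # samples violating the margin
        grad_w = lam * w - (y[active][:, None] * X[active]).sum(axis=0) / len(y)
        grad_b = -y[active].sum() / len(y)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def predict(X, w, b):
    return np.where(X @ w + b >= 0, 1, -1)

def make_synthetic_trials(n_trials=60, n_channels=4, fs=128, seconds=2, seed=0):
    """Synthetic stand-in for EEG: 'high' trials carry an extra 10 Hz oscillation."""
    rng = np.random.default_rng(seed)
    t = np.arange(fs * seconds) / fs
    ratings = rng.uniform(1, 9, size=n_trials)                # self-reports on a 1-9 scale
    labels = np.where(ratings > 5, 1, -1)                     # assumed midpoint threshold
    trials = rng.normal(size=(n_trials, n_channels, t.size))
    trials[labels == 1] += 2.0 * np.sin(2 * np.pi * 10 * t)
    return trials, labels

def run_demo():
    trials, labels = make_synthetic_trials()
    X = np.array([band_power_features(tr, fs=128) for tr in trials])
    train, test = slice(0, 40), slice(40, 60)
    mu, sd = X[train].mean(axis=0), X[train].std(axis=0)      # standardise on training data only
    X = (X - mu) / sd
    w, b = train_linear_svm(X[train], labels[train])
    return float(np.mean(predict(X[test], w, b) == labels[test]))

accuracy = run_demo()
print(f"held-out accuracy on synthetic data: {accuracy:.2f}")
```

The synthetic classes are deliberately easy to separate, so the accuracy printed here says nothing about real EEG, where the figures quoted above are far more modest.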

-

Affective and Implicit Tagging using Facial Expressions and Electroencephalography.

S. Koelstra.

Queen Mary University of London, 2012. PhD Thesis.

@PhdThesis{Koelstra2012affective, title = "{Affective and Implicit Tagging using Facial Expressions and Electroencephalography}", author = "S. Koelstra", school = "Queen Mary University of London", month = mar, year = "2012", note = "PhD Thesis", }

-

Fusion of facial expressions and EEG for implicit affective tagging.

S. Koelstra and I. Patras.

In Image and Vision Computing, 2012.

@article{koelstra2012fusion, title={Fusion of facial expressions and EEG for implicit affective tagging}, author={S. Koelstra and I. Patras}, journal={Image and Vision Computing}, year={2012}, publisher={Elsevier} }

-

DEAP: A database for emotion analysis using physiological signals.

S. Koelstra, C. Muehl, M. Soleymani, J.-S. Lee, A. Yazdani, T. Ebrahimi, T. Pun, A. Nijholt and I. Patras.

In IEEE Transactions on Affective Computing, vol. 3, no. 1, 2012.

@article{koelstra2012deap, title={{DEAP}: A database for emotion analysis using physiological signals}, author={S. Koelstra and C. Muehl and M. Soleymani and J.-S. Lee and A. Yazdani and T. Ebrahimi and T. Pun and A. Nijholt and I. Patras}, journal={Affective Computing, IEEE Transactions on}, volume={3}, number={1}, pages={18--31}, year={2012}, publisher={IEEE} }

-

Continuous Emotion Detection in Response to Music Videos.

M. Soleymani, S. Koelstra, I. Patras and T. Pun.

In International Workshop on Emotion Synthesis, rePresentation, and Analysis in Continuous spacE (EmoSPACE), in conjunction with IEEE FG 2011, 2011.

@inproceedings{Soleymani, author = {M. Soleymani and S. Koelstra and I. Patras and T. Pun}, booktitle = {International Workshop on Emotion Synthesis, rePresentation, and Analysis in Continuous spacE (EmoSPACE) In conjunction with the IEEE FG 2011}, title = {{Continuous Emotion Detection in Response to Music Videos}}, pages = {803--808}, year = {2011}, abstract={We present a multimodal dataset for the analysis of human affective states. The electroencephalogram (EEG) and peripheral physiological signals of 32 participants were recorded as each watched 40 one-minute long excerpts of music videos. Participants rated each video in terms of the levels of arousal, valence, like/dislike, dominance and familiarity. For 22 of the 32 participants, frontal face video was also recorded. A novel method for stimuli selection is proposed using retrieval by affective tags from the last.fm website, video highlight detection and an online assessment tool. An extensive analysis of the participants' ratings during the experiment is presented. Correlates between the EEG signal frequencies and the participants' ratings are investigated. Methods and results are presented for single-trial classification of arousal, valence and like/dislike ratings using the modalities of EEG, peripheral physiological signals and multimedia content analysis. Finally, decision fusion of the classification results from the different modalities is performed. The dataset is made publicly available and we encourage other researchers to use it for testing their own affective state estimation methods.} }

-

Single trial classification of EEG and peripheral physiological signals for recognition of emotions induced by music videos.

S. Koelstra, A. Yazdani, M. Soleymani, C. Muehl, J.-S. Lee, A. Nijholt, T. Pun, T. Ebrahimi and I. Patras.

In Brain Informatics, 2010.

@inproceedings{koelstra2010single, title={Single trial classification of EEG and peripheral physiological signals for recognition of emotions induced by music videos}, author={S. Koelstra and A. Yazdani and M. Soleymani and C. Muehl and J.-S. Lee and A. Nijholt and T. Pun and T. Ebrahimi and I. Patras}, booktitle={Brain Informatics}, pages={89--100}, year={2010}, publisher={Springer} }

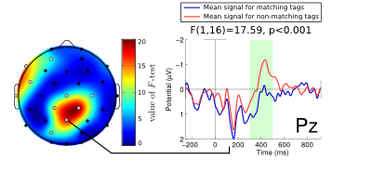

EEG analysis for implicit tag validation

I investigated the use of EEG analysis for the implicit validation of tags related to multimedia data. That is, we tried to validate tags for multimedia content based on users' brainwaves as they watch the content. I ran an experiment in which users were shown a set of videos, along with matching or non-matching tags. As it turns out, there are significant differences in the EEG signals between trials where matching tags are displayed and trials with non-matching tags. The QMUL-UT dataset we collected is now publicly available.
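One common way to check whether two sets of trials differ significantly is a permutation test on a per-trial summary value. The sketch below is illustrative only: the statistical procedure actually used in this work is not stated here, and the per-trial amplitudes, group sizes, effect size and function name (`permutation_test`) are all hypothetical.

```python
import numpy as np

def permutation_test(a, b, n_permutations=5000, seed=0):
    """Two-sided permutation test on the difference of means between samples a and b."""
    rng = np.random.default_rng(seed)
    observed = a.mean() - b.mean()
    pooled = np.concatenate([a, b])
    count = 0
    for _ in range(n_permutations):
        perm = rng.permutation(pooled)                 # shuffle condition labels
        diff = perm[:a.size].mean() - perm[a.size:].mean()
        if abs(diff) >= abs(observed):
            count += 1
    # Add-one correction so the estimated p-value is never exactly zero.
    p_value = (count + 1) / (n_permutations + 1)
    return observed, p_value

# Synthetic per-trial values (e.g. mean amplitude in some post-stimulus window);
# these numbers are invented purely to exercise the function.
rng = np.random.default_rng(1)
match = rng.normal(loc=0.0, scale=1.0, size=40)
nonmatch = rng.normal(loc=-1.5, scale=1.0, size=40)

observed, p_value = permutation_test(match, nonmatch)
print(f"mean difference = {observed:.2f}, p = {p_value:.4f}")
```

A permutation test makes no assumption about the distribution of the summary value, which is convenient for EEG features. When many channels or time windows are tested, a multiple-comparison correction would also be needed.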

-

EEG analysis for implicit tagging of video data.

S. Koelstra, C. Muehl, and I. Patras.

In 3rd International Conference on Affective Computing and Intelligent Interaction and Workshops (ACII 2009), 2009.

@inproceedings{koelstra2009eeg, title={EEG analysis for implicit tagging of video data}, author={S. Koelstra and C. Muehl and I. Patras}, booktitle={Affective Computing and Intelligent Interaction and Workshops, 2009. ACII 2009. 3rd International Conference on}, pages={1--6}, year={2009}, organization={IEEE} }